My three favorite parts of the System Center suite are Configuration Manager, Data Protection Manager and Endpoint Protection. These three products do a good job of lightening most of the chores of running an IT environment.

Configuration Manager & Endpoint Protection

This is, in my opinion, the flagship product of the System Center suite. Management of servers, workstations and even mobile devices is handled here, and with Service Pack 1 an impressive list of operating systems and devices is supported, including Linux and Mac OS. Mobile device management has now been brought into Configuration Manager as well. It is also within Configuration Manager that you should deploy and manage Endpoint Protection. Endpoint Protection was formerly known as Forefront Endpoint Protection, and I really hope this product continues to be supported and isn’t eventually dropped like other Forefront products, such as TMG, have been. If you are lucky enough to have the Standard or Enterprise CAL already (and you really should if you are looking at System Center), you may be able to save a fair bit of money by ditching your current antivirus vendor and moving to Endpoint Protection.

In the past I would typically have used a standard Windows deployment from a share or USB volume, or another vendor’s solution such as Ghost, because the effort of setting up Configuration Manager wasn’t always worth the reward. Deployments are now a lot easier, and when combined with a decent collection of drivers and task sequences it is simple to cater quickly for a new situation or a new model of desktop or server.

Data Protection Manager

In my experience no backup solution is perfect, and each generally has its strengths and weaknesses. With the 2012 iteration of Data Protection Manager, the Microsoft offering is looking to have more of the former and fewer of the latter.

DPM is great at backing up Microsoft’s own products and applications, and I have been using it to back up nearly 2TB of Exchange data and a large SharePoint farm as well.

DPM offers many of the features an enterprise backup solution should, such as continuous protection, differential and incremental backups, and both disk-to-disk and disk-to-tape backups. I feel that it is only in the scheduling and retention options that DPM starts to fall down. Typically I like to keep daily data for a month, weekly data for 2-6 months, month-end data for 2 years and year-end data for even longer. Unfortunately the retention and scheduling options don’t really cater for this approach. You simply have a hard limit on how long you can retain backup data, with disk-to-disk used for the short term and a second schedule for long-term tape backups. This leads to me using a different product to perform end-of-month backups, simply so that I can keep them for longer than the other tape backups.
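To make the retention scheme I describe above concrete, here is a minimal Python sketch of the rules. It is purely an illustration of the policy and has nothing to do with how DPM itself is configured or scripted. The function names, the choice of Sunday for the weekly backup and the seven-year figure for year-end data are all my own assumptions; the daily, weekly and month-end periods come from the paragraph above, using the upper end of the weekly range.

```python
from datetime import date, timedelta

# Retention periods in days. The first three follow the text above;
# the year-end figure is an assumption ("even longer" is all the text says).
RETENTION_DAYS = {
    "daily": 31,          # about a month
    "weekly": 183,        # upper end of the 2-6 month range
    "month_end": 730,     # 2 years
    "year_end": 7 * 365,  # hypothetical: 7 years
}

def classify(backup_date: date) -> str:
    """Return the retention tier for a backup taken on backup_date."""
    next_day = backup_date + timedelta(days=1)
    if next_day.year != backup_date.year:
        return "year_end"    # last day of the year
    if next_day.month != backup_date.month:
        return "month_end"   # last day of any other month
    if backup_date.weekday() == 6:
        return "weekly"      # Sunday (assumed weekly backup day)
    return "daily"

def should_keep(backup_date: date, today: date) -> bool:
    """True if the backup is still inside its tier's retention period."""
    age = (today - backup_date).days
    return 0 <= age <= RETENTION_DAYS[classify(backup_date)]
```

For example, `should_keep(date(2013, 3, 5), date(2013, 6, 1))` is false because an ordinary Tuesday backup has aged out after a month, while the Sunday backup from two days earlier is still inside its weekly window. A single tiered rule set like this is the kind of thing I would like a backup product to express without resorting to a second tool.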

Generally DPM performs very well and can complete backups shockingly quickly, but it does have a tendency to occasionally mark replicas as bad or fail a snapshot, only for the same job to succeed later without issue. This is quite possibly a quirk of the environment I evaluated it in, but it seems to happen a lot less often with other solutions. Also, when upgrading to SP1, be prepared to check the consistency of every replica, and build the time this takes into your upgrade plan.

Service Pack 1 is definitely worth the upgrade, as it brings a number of feature improvements such as support for deduplicated volumes, and it is the final piece of the puzzle for getting dedupe working on cheap hardware. With Windows Server 2012 and Data Protection Manager you can use deduped volumes without the need to buy expensive storage solutions and licenses. The useful extra features don’t end there. Cluster Shared Volumes can now be backed up, and Hyper-V guest machines can be continuously protected even while they are being live migrated.

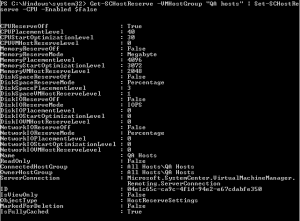

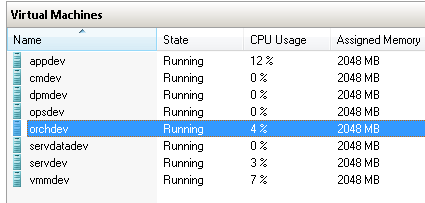

Virtual Machine Manager

Virtual Machine Manager is to Hyper-V as vCenter Server is to VMware. VMM is the only real additional software cost you will have to bear if you want to use a full Hyper-V clustered solution. (God help anyone who wants to manage a large cluster of Hyper-V hosts as individual servers.) The thinking is obviously that if Microsoft gives you the hypervisor for free, you won’t balk at paying for the management tools, and I expect a good number of people will buy the System Center suite simply to be able to run Virtual Machine Manager. If this sounds like you, I hope you at least try the other parts of the System Center suite, as they are worth a good look.

Orchestrator

Orchestrator is the centre point of the System Center suite and ties all of the other products together to make an intelligent, workflow-based automation solution. It is based on software Microsoft acquired when it purchased Opalis back in 2009.

Orchestrator makes sense in larger environments, or where there is a real requirement for automation, such as in managed hosting. It will likely be of less use in smaller environments, as the time taken to configure and automate tasks won’t have quite the same payback.

With Orchestrator it is possible to automate almost anything, from deploying VMs through to recovering from an error condition in a service. You can even find integration packs from various vendors, which let you control and automate their products from Orchestrator.

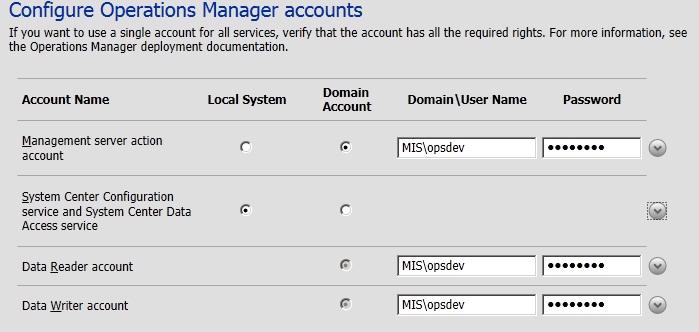

Operations Manager

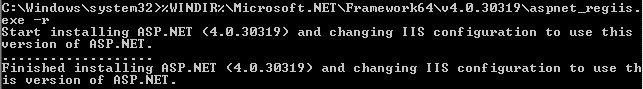

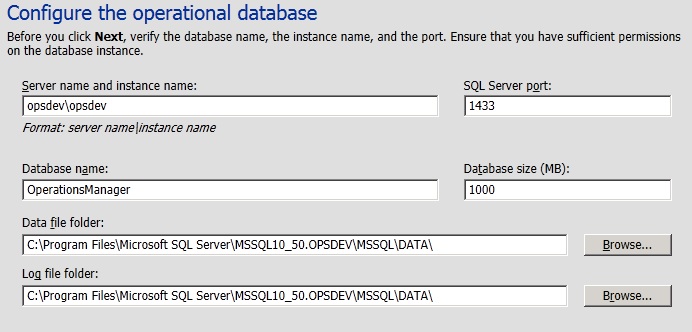

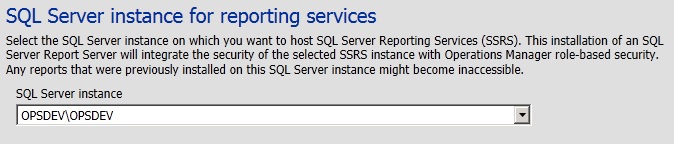

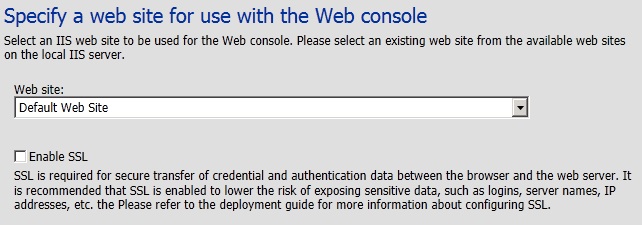

Operations Manager is Microsoft’s monitoring and alerting system, and in the latest version it does a lot more than peer into event logs and give you a huge list of errors. As with the rest of the System Center suite, Service Pack 1 introduces support for Linux, which enhances the appeal of Operations Manager a little, and the list of supported applications and devices seems to be constantly growing as well. No doubt pure Linux environments will be running Nagios or something similar, but for mixed or pure Microsoft environments Operations Manager is definitely one of the best out there.

Service Manager

Service Manager is the System Center component I have spent the least time looking at. It is hard to get excited about help desk solutions, especially when so many people spend so long logged into them. Possibly the best feature of Service Manager is the auditing and reporting. If correctly configured with Orchestrator, Service Manager can help you to identify why problems are occurring, or when changes were made that could have contributed to an issue. Service Manager doesn’t feel like the kind of product people would buy on its own, but if you have already paid for the full System Center suite you would be silly not to at least try it. As with all of the other solutions mentioned here, the longer you use them, the more you come to realise how powerful they are.

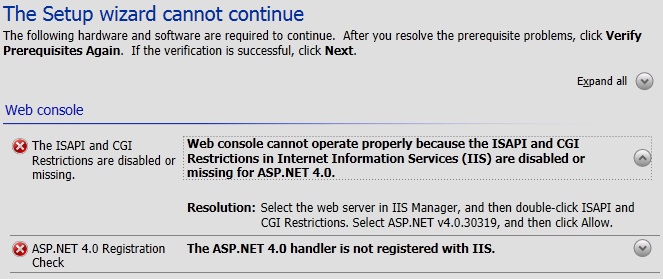

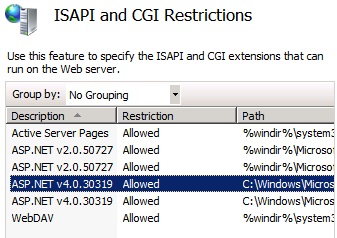

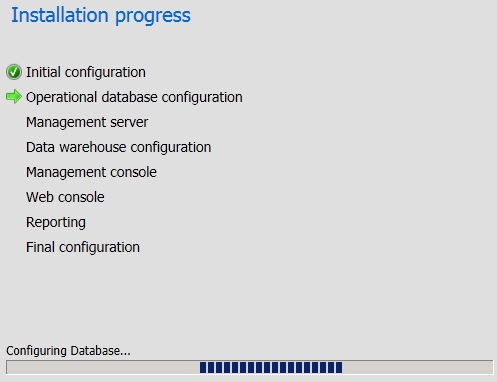

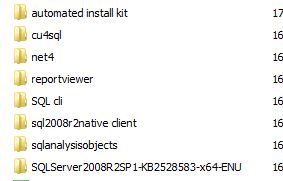

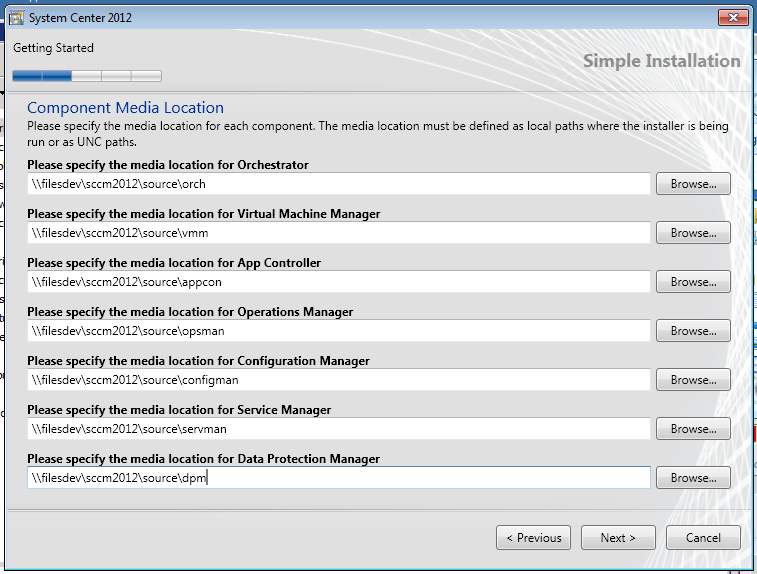

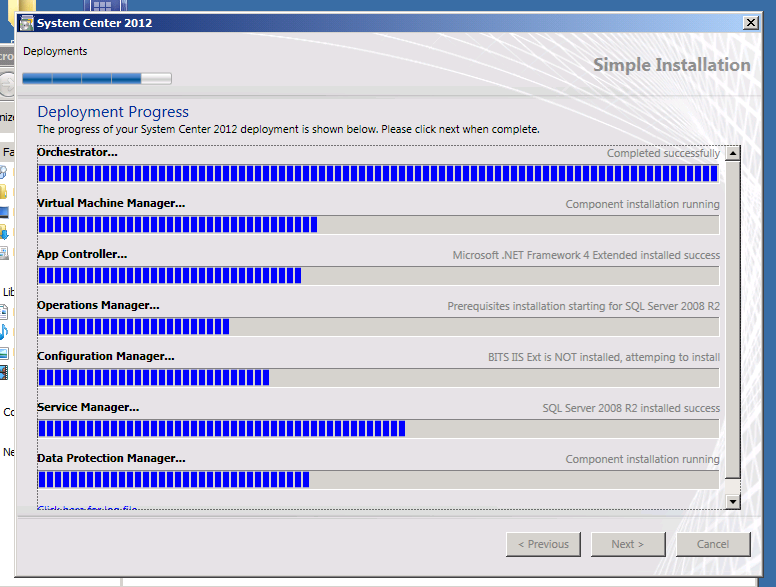

Unified Installer

There is a unified installer which is great for quickly deploying the whole System Center suite, and you can read all about my experience here. I also urge you to click through some of the categories above for more System Center related posts.