If a virtual host in Virtual Machine Manager (VMM) is not responding, and forcing a manual refresh returns an error like this:

Error (2910)

VMM does not have appropriate permissions to access the resource C:\Windows\system32\qmgr.dll on the server.

Access is denied (0x80070005)

This can often be remedied by one of the following: reinstalling the VMM agent, restarting the Virtual Machine Manager Agent and WMI services, or restarting the virtual host. It is also worth making sure all your hosts are fully up to date.

Occasionally I see a host where none of this works and, no matter what I try, it remains “not responding” in VMM. In those cases the cause appears to be a broken winrm configuration. It is easy to be fooled into thinking winrm is set up correctly, because “winrm /quickconfig” reports that it is already set up and the winrm service is running.

It looks like all the “winrm /quickconfig” command does is check that winrm has been enabled; it won’t reset other settings that may be incorrect or broken.
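Because quickconfig only confirms that winrm is enabled, it can be more telling to read the individual settings back. Below is a minimal Python sketch, assuming you have saved the output of `winrm get winrm/config` from the host to a text file (for example with `winrm get winrm/config > winrm-config.txt` in a command prompt) and that the output uses the indented `Name = value` layout that command normally prints. The expected values are simply the ones set by the winrm commands further down this post; the script name, file name and function names are hypothetical.

```python
import re
import sys

# Values set by the winrm commands later in this post (assumed to be the "good" state).
# Keys are section paths as they appear in `winrm get winrm/config` output.
EXPECTED = {
    "Config/Client/TrustedHosts": "*",
    "Config/Client/Auth/CredSSP": "true",
    "Config/Service/Auth/CredSSP": "true",
    "Config/Winrs/AllowRemoteShellAccess": "true",
    "Config/Winrs/MaxMemoryPerShellMB": "2048",
}


def parse_winrm_config(text: str) -> dict[str, str]:
    """Flatten `winrm get winrm/config` output into {"Config/Section/Key": value}."""
    settings: dict[str, str] = {}
    stack: list[tuple[int, str]] = []  # open sections as (indent, name)
    for raw in text.splitlines():
        if not raw.strip():
            continue
        indent = len(raw) - len(raw.lstrip())
        line = raw.strip()
        while stack and stack[-1][0] >= indent:
            stack.pop()  # this line closes sections at the same depth or deeper
        parent = "/".join(name for _, name in stack)
        settings.pop(parent, None)  # parent has children, so it is a section
        if "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            value = re.sub(r'\s*\[Source="[^"]*"\]$', "", value)  # drop GPO annotations
            settings[f"{parent}/{key}" if parent else key] = value
        else:
            # Section header, or a setting with no value (e.g. an empty TrustedHosts).
            settings[f"{parent}/{line}" if parent else line] = ""
            stack.append((indent, line))
    return settings


def check_settings(settings: dict[str, str]) -> list[str]:
    """Return one message per expected setting that is missing or different."""
    problems = []
    for path, want in EXPECTED.items():
        have = settings.get(path)
        # winrm prints booleans in lower case, so compare case-insensitively.
        if have is None or have.lower() != want.lower():
            problems.append(f"{path}: expected {want!r}, found {have!r}")
    return problems


if __name__ == "__main__" and len(sys.argv) == 2:
    # Hypothetical usage: python check_winrm.py winrm-config.txt
    with open(sys.argv[1], encoding="utf-8", errors="replace") as f:
        problems = check_settings(parse_winrm_config(f.read()))
    print("\n".join(problems) if problems else "All checked settings match.")
```

Lines without an `=` are treated as section headers unless something is nested under them, in which case they stay recorded as empty settings, which is how an unset TrustedHosts shows up.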

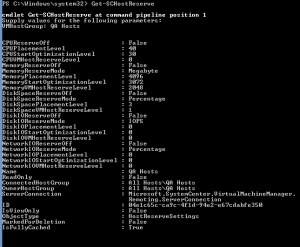

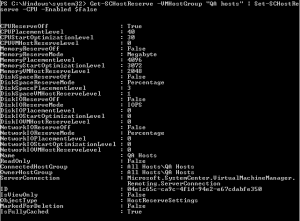

Comparing the winrm configuration and registry of a working, identical host with a “not responding” host, I found that the following commands correct the settings that had drifted, and usually result in a host that responds to VMM again.

reg add HKLM\SOFTWARE\Microsoft\Windows\CurrentVersion\Policies\System /v LocalAccountTokenFilterPolicy /t REG_DWORD /d 1 /f

winrm set winrm/config/service/auth @{CredSSP="True"}

winrm set winrm/config/winrs @{AllowRemoteShellAccess="True"}

winrm set winrm/config/winrs @{MaxMemoryPerShellMB="2048"}

winrm set winrm/config/client @{TrustedHosts="*"}

winrm set winrm/config/client/auth @{CredSSP="True"}

Be sure to run these on the affected host in an elevated (administrator) command prompt.
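To repeat the comparison with a working, identical host yourself, it can help to diff the two configurations setting by setting rather than by eye. The sketch below assumes the output of `winrm get winrm/config` has been saved on each host to a text file (the file, script and function names are hypothetical) and prints every setting whose value differs, keyed by its full section path so that, for example, the two `CredSSP` entries under `Client/Auth` and `Service/Auth` are kept apart. It only covers the winrm configuration; registry values such as `LocalAccountTokenFilterPolicy` still need to be compared separately.

```python
import re
import sys


def parse_winrm_config(text: str) -> dict[str, str]:
    """Flatten `winrm get winrm/config` output into {"Config/Section/Key": value}."""
    settings: dict[str, str] = {}
    stack: list[tuple[int, str]] = []  # open sections as (indent, name)
    for raw in text.splitlines():
        if not raw.strip():
            continue
        indent = len(raw) - len(raw.lstrip())
        line = raw.strip()
        while stack and stack[-1][0] >= indent:
            stack.pop()  # this line closes sections at the same depth or deeper
        parent = "/".join(name for _, name in stack)
        settings.pop(parent, None)  # parent has children, so it is a section
        if "=" in line:
            key, value = (part.strip() for part in line.split("=", 1))
            value = re.sub(r'\s*\[Source="[^"]*"\]$', "", value)  # drop GPO annotations
            settings[f"{parent}/{key}" if parent else key] = value
        else:
            # Section header, or a setting with no value (e.g. an empty TrustedHosts).
            settings[f"{parent}/{line}" if parent else line] = ""
            stack.append((indent, line))
    return settings


def diff_configs(good: dict[str, str], bad: dict[str, str]) -> list[tuple[str, str, str]]:
    """Return (path, working value, not-responding value) for each differing setting."""
    missing = "<missing>"
    return [
        (path, good.get(path, missing), bad.get(path, missing))
        for path in sorted(good.keys() | bad.keys())
        if good.get(path) != bad.get(path)
    ]


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python diff_winrm.py good-host.txt bad-host.txt
    configs = []
    for name in sys.argv[1:]:
        with open(name, encoding="utf-8", errors="replace") as f:
            configs.append(parse_winrm_config(f.read()))
    for path, good_value, bad_value in diff_configs(*configs):
        print(f"{path}: working={good_value!r} not-responding={bad_value!r}")
```

Comparing exact strings keeps the output honest about what each host reports; any differences it lists are candidates to correct, not proof that a given setting is the cause.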